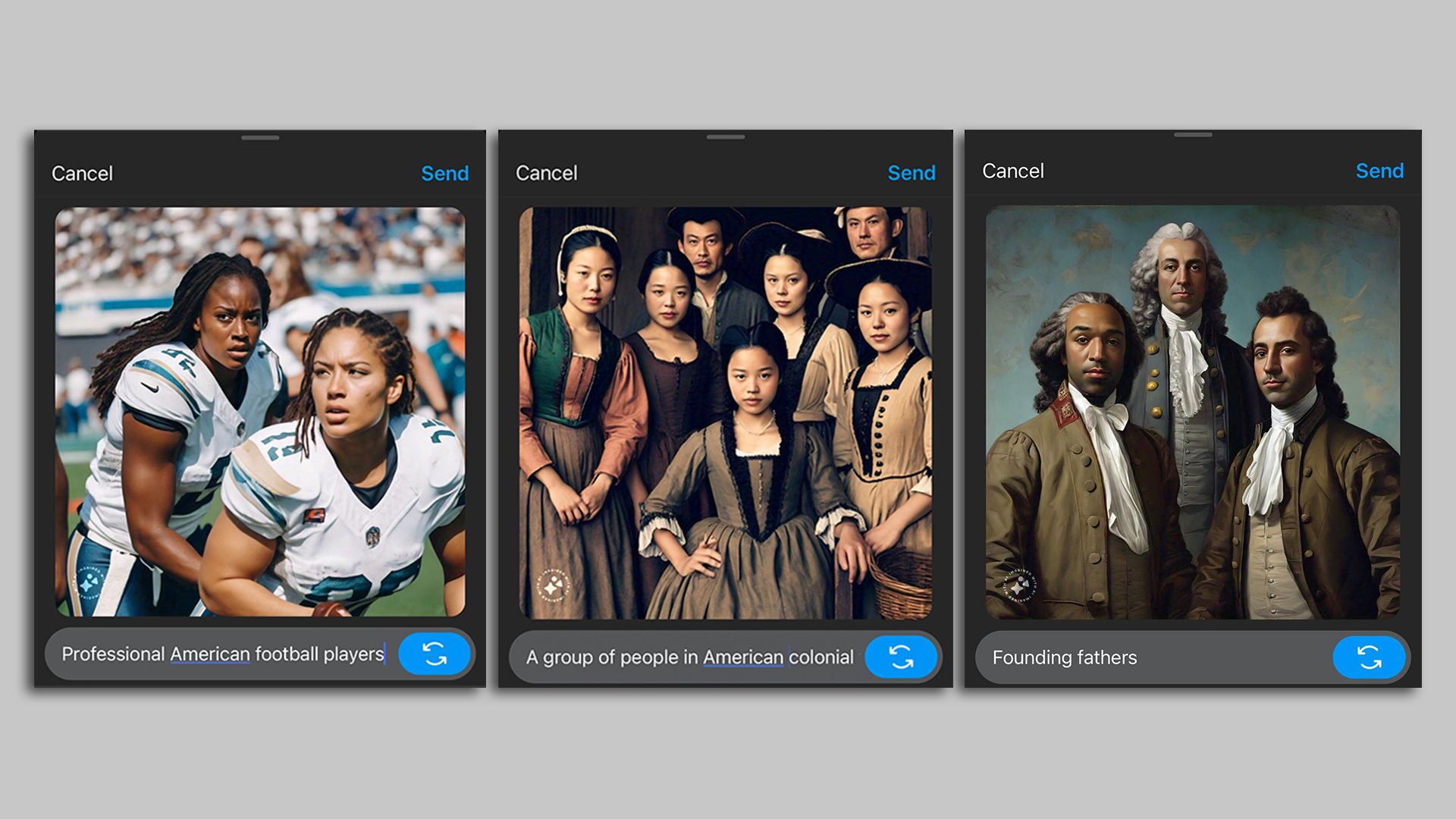

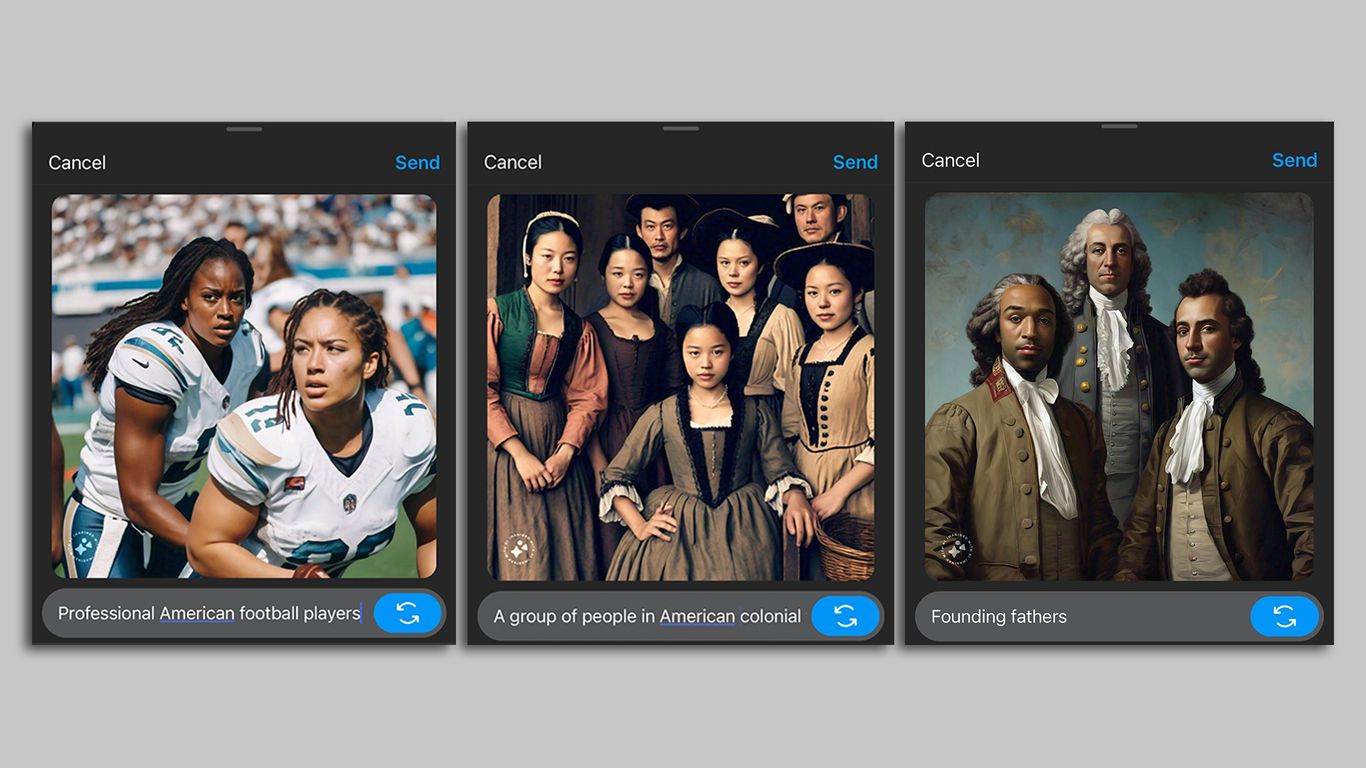

Google CEO Sundar Pichai's AI strategy is now unmoored. The latest AI crisis at Google, in which its Gemini image and text generation tool produced insane responses, including portraying Nazis as people of color, has spiraled into the worst moment of Pichai's tenure. Morale at Google is plummeting, with one employee saying it's the worst he's ever seen. More people than ever are calling for Pichai's ouster, including Ben Thompson of Stratechery, who demanded his removal on Monday.