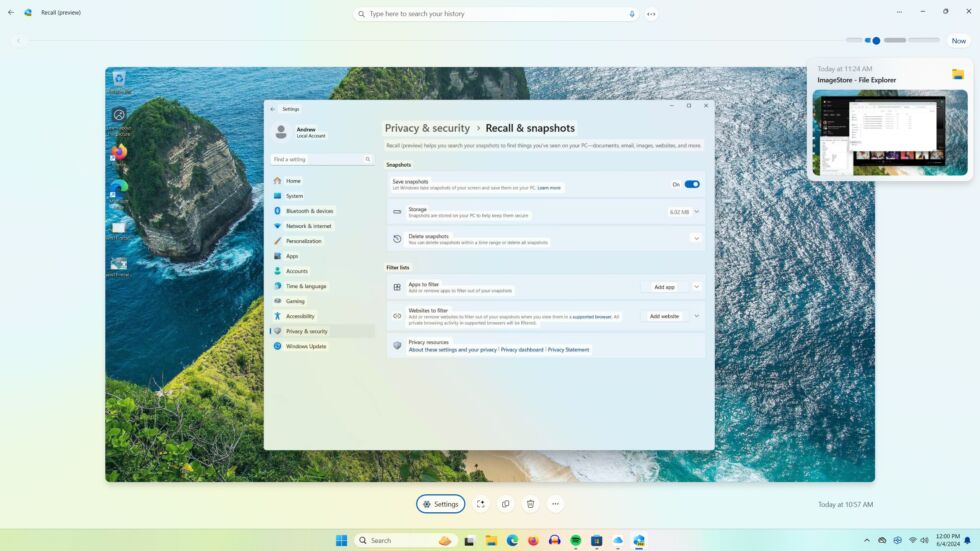

As data privacy and security grow more important, Microsoft's new Recall feature for Windows 11 has raised serious concerns among users and cybersecurity experts. The feature, set to debut on June 18th on Copilot Plus PCs, uses AI to take periodic screenshots of everything a user does on their computer and saves them, along with the personal data they contain, in a locally stored SQLite database. Microsoft maintains that Recall is an optional experience with privacy controls, but critics argue that it poses a substantial security risk, especially given the vulnerabilities found in the current version of the feature.

Cybersecurity expert Kevin Beaumont discovered several weaknesses in Recall, including that the captured data is stored in plain text and that extracting information from the supposedly encrypted store appears relatively trivial. This raises the question of how easily an attacker could read a user's Recall database, either with local access to the PC or by infecting it with info-stealer malware.

Recall also uses optical character recognition (OCR) to extract text from its screenshots so they can be searched quickly. That extracted text is a large part of what critics object to: by default, Recall captures a large amount of personal data, including sensitive information such as usernames, passwords, and health care information.

Beaumont's findings have intensified calls for Microsoft to recall the Recall feature over its security and privacy risks. Analysts at Directions on Microsoft have also questioned whether Microsoft should pull the feature, pointing to the investments the company has made in compliance services such as Purview, which allows compliance teams to monitor user activities. Despite these concerns, Microsoft has not yet announced any plans to withdraw or modify Recall.

Beyond the specific vulnerabilities, some experts argue that the feature itself could make it easier for malware and attackers to steal information, because it provides a comprehensive record of a user's activity on their computer. Microsoft, for its part, maintains that Recall is optional and offers privacy controls, such as the ability to exclude specific apps and websites, although its own documentation acknowledges that Recall does not hide sensitive information such as passwords or financial account numbers in its snapshots.

Overall, the controversy surrounding Recall highlights the ongoing struggle to balance technological innovation with privacy and security. As more companies adopt AI-powered tools, they need to account for the risks and vulnerabilities those tools introduce so that users' data stays secure.
