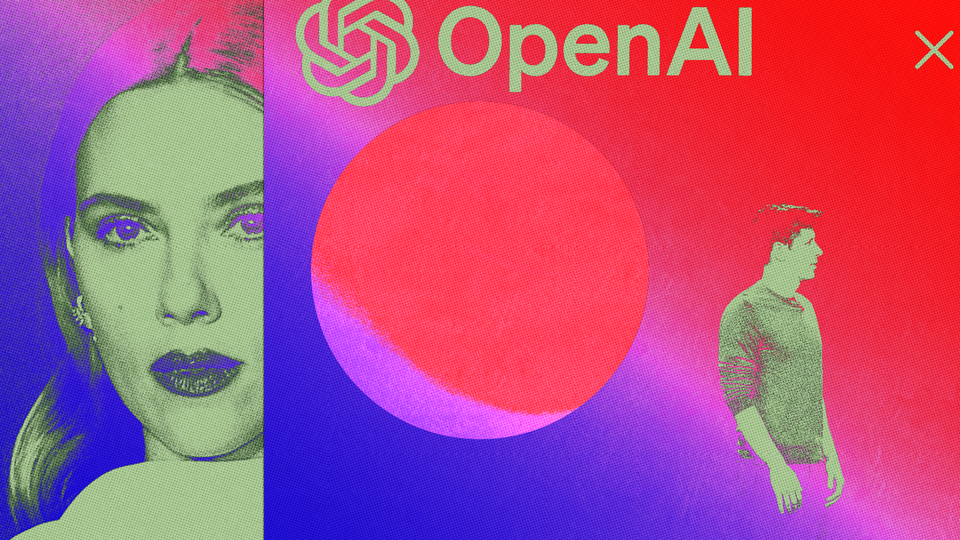

In a recent turn of events, Hollywood actor Scarlett Johansson found herself at the center of a controversy involving OpenAI and 'Sky', a voice for its ChatGPT assistant. According to reports, Johansson had previously declined an invitation from OpenAI to license her voice for the company's AI system. Sky's voice, however, turned out to bear a striking resemblance to her own, which led the actress to threaten legal action against OpenAI.

OpenAI CEO Sam Altman initially denied any wrongdoing, saying the company had already cast a different voice actor for Sky before reaching out to Johansson. In response to the public backlash, however, Altman announced that OpenAI would take down Sky's voice 'out of respect' for Johansson.

This incident has raised concerns about the use of digital replication technologies and their potential impact on performers' livelihoods. The SAG-AFTRA union, which represents thousands of Hollywood actors, welcomed OpenAI's decision to pause its use of Sky and said it looks forward to working with the company on protecting performers' voices and likenesses.

The rapid rise of voice imitation technologies has caused anxiety among many in the entertainment industry. Last summer, SAG-AFTRA went on strike in part over concerns about the future of generative AI in the industry.

This is not the first time OpenAI has faced legal challenges over the use of creators' data without consent. The company has also drawn criticism for its end goal of building an artificial general intelligence (AGI) that could significantly affect many aspects of human life, including jobs, science, and medicine.

As this story continues to unfold, it remains to be seen how the industry will respond and what measures will be taken to protect performers' rights in the age of AI.