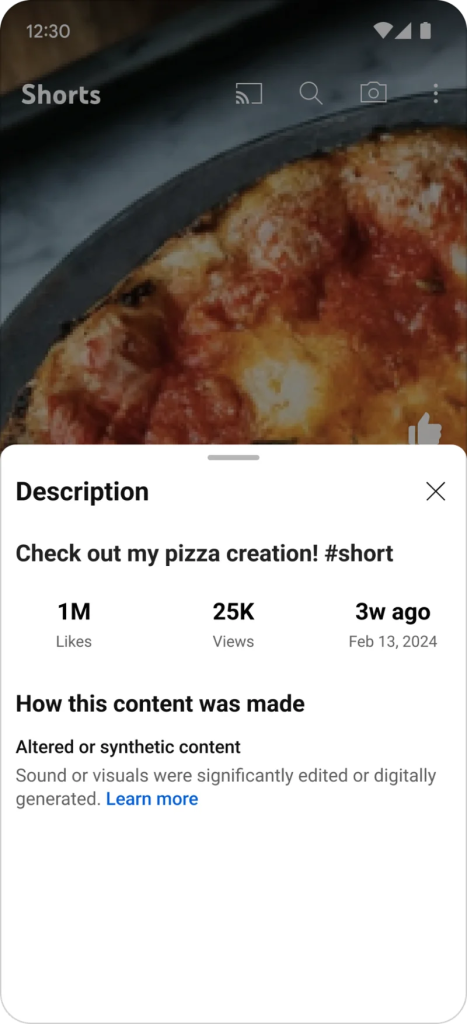

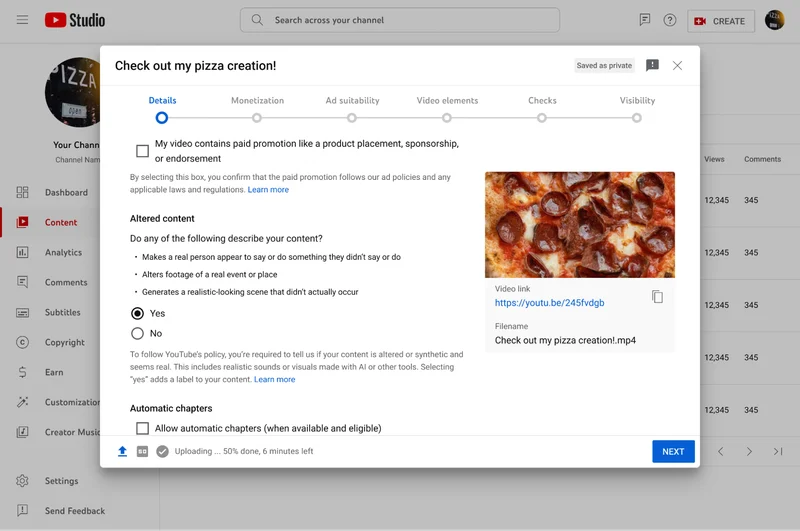

YouTube has announced that it will require creators to disclose when they use altered or synthetic content, including content made with AI. The disclosure will appear as a label in the expanded description or on the front of the video player, and it is meant to keep viewers from being confused by synthetic content amid a proliferation of new, consumer-facing generative AI tools. Creators will have to identify when their videos contain realistic-looking AI-generated or otherwise manipulated content so that YouTube can attach the label for viewers. The platform said the update would arrive in the fall as part of a larger rollout of new AI policies.