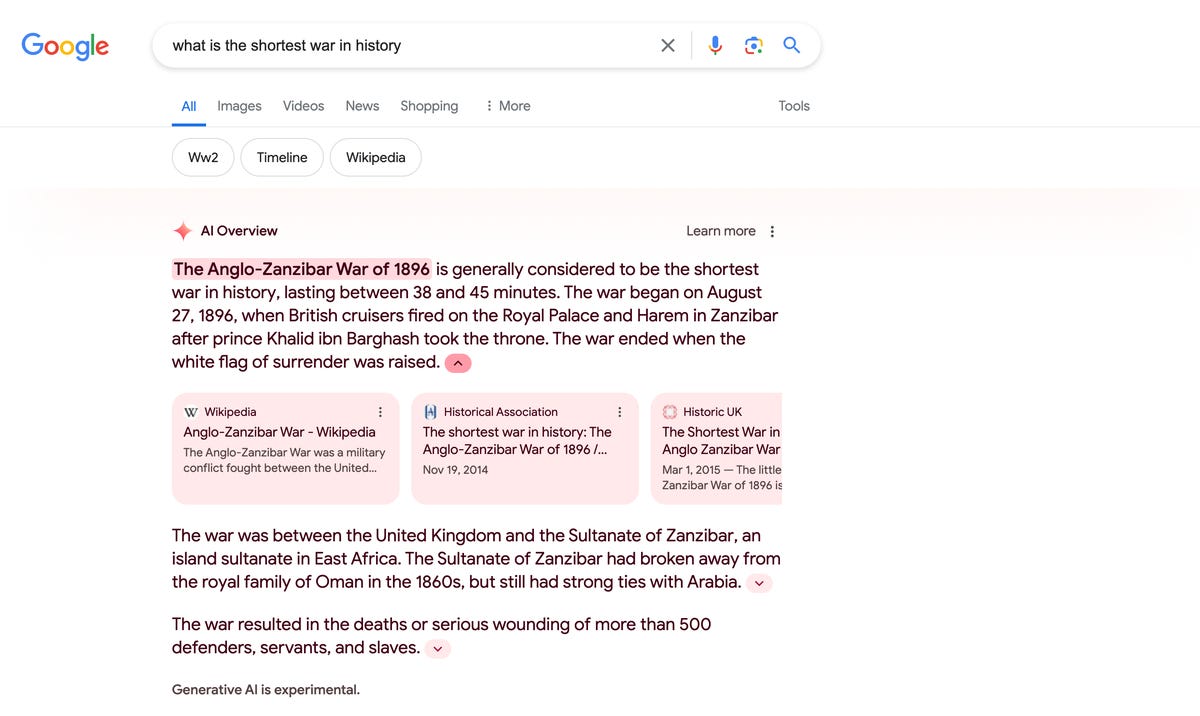

Google's AI-generated answers to search queries, known as AI Overviews, have drawn controversy in recent weeks over their accuracy and their potential impact on the media industry. According to various sources, including Search Engine Land and Wired, Google significantly reduced how often AI Overviews appear following performance issues and a series of incorrect or misleading answers.

In one instance, Google drew its responses to health queries from trusted websites such as the Mayo Clinic and the CDC but still produced inaccurate information. Elsewhere, AI Overviews suggested eating rocks for nutrition and making pizza with glue.

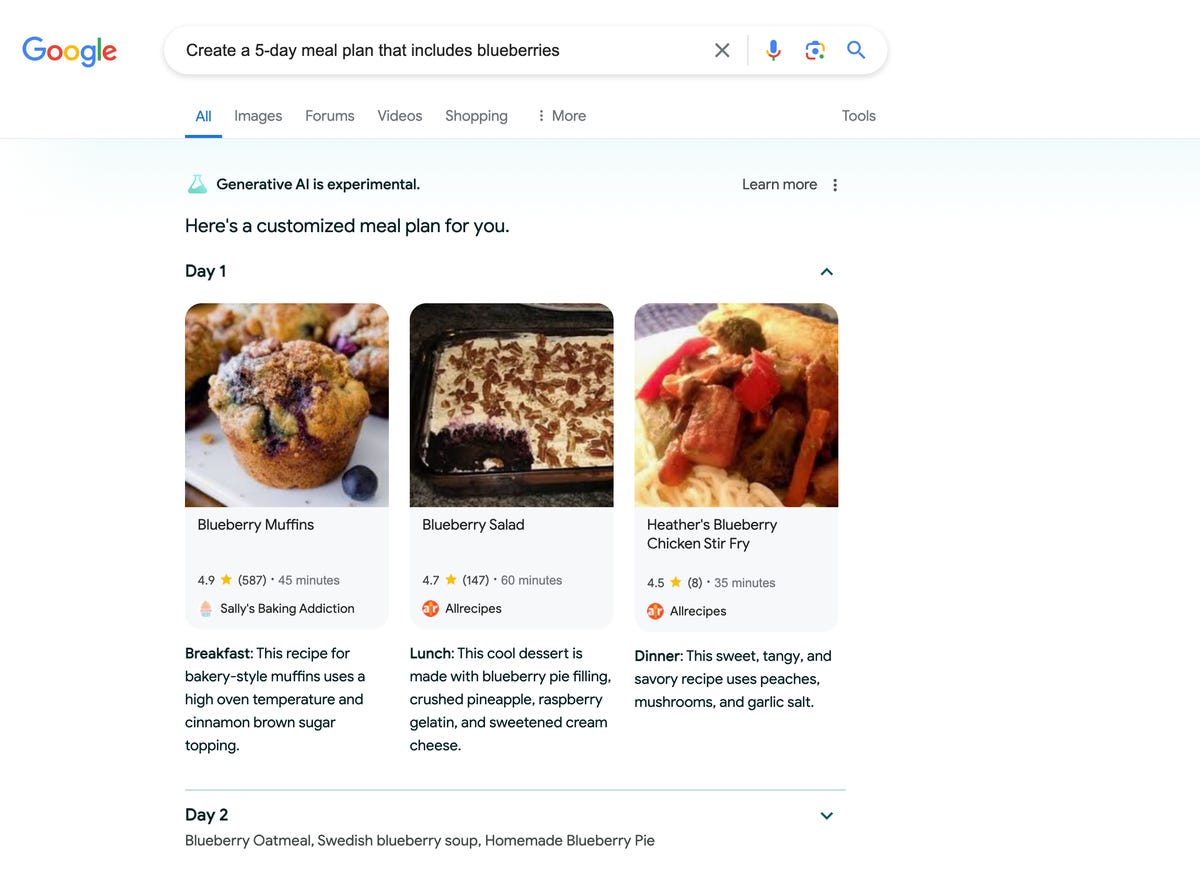

The reduction in AI Overviews occurred around mid-April and continued into May, according to data from BrightEdge. At one point, AI Overviews appeared on over 84% of queries but now only show up for approximately 15%. The drop was most noticeable for queries in the healthcare industry.

Numerous incorrect and dangerous answers followed Google's announcement of the AI Overviews launch in the US. Even so, some users have been unable to turn off AI Overviews through settings. Instead, they have resorted to using different web browsers or the Web tab in Google search results to avoid them.

The controversy surrounding AI Overviews raises questions about the role of technology in journalism and information dissemination. Some argue that AI-generated summaries could lead to a loss of depth and nuance in understanding, while others see them as an opportunity for more efficient and convenient access to information.

E.B. White's 1935 story 'Irtnog' warns of a future where people demand increasingly condensed versions of knowledge, leading to a loss of depth and nuance in understanding. The news industry is already under strain: nearly one-third of US newspapers have gone out of business since 2005, leaving thousands of communities without a local news source.

Digital subscriptions and digital ads have been growing for some outlets, but Google's AI could reduce the need to click through to original articles, further eroding traffic and revenue for news publishers. It remains to be seen how this trend will impact the future of robust journalism and the media industry as a whole.